Power BI (Premium) now offers a native migration option to Power BI. I recommend first exploring that, before taking this approach. You can find more details here. Also read this blog around migration, how and why.

Over the last two weeks, I had the pleasure to work on an innovative case where we wanted to migrate on premises Analysis Services models to a Power BI Premium data model. Definitely an interesting case! Because of this project I finally found some decent time to investigate XMLA endpoints for Power BI Premium!

In this blog I will describe the journey we took to migrate one of the existing Analysis Services models to Power BI Premium and provide a step-by-step guide how to do this yourself.

Spoiler! You do not need a Power BI Premium P-SKU to get this working!

Considerations, Power BI Premium or Azure Analysis Services?

Before we jump into all the details, you might have a few questions at this moment. Below I describe a few of them.

- There is an Analysis Service model already, why migrate it?

The current model is an on-premises analysis services tabular model. Together with the client, we took this approach to explore their way to the cloud in a proof-of-concept. - Why do you want to move to Power BI Premium and not simply “lift & shift” to Azure Analysis Services?

In the announcements done by Microsoft over the last year, they state the Power BI Premium will offer a superset of what Azure Analysis Services has to offer today. With all the recent improvements in Power BI Premium and the main focus of the Microsoft team to have new developments in Power BI Premium before they are released in Azure Analysis Services, we decided to first look at Power BI Premium. Recent improvements such as XMLA endpoints are making a huge difference in this case.

If you want to learn more about the way forward according to Microsoft, I advise you to check the links to external content at the end of this blogpost. - Why using Tabular Editor and not deploy the model from Visual Studio to the Power BI XMLA endpoint?

We have tried that and bumped into a few challenges. It seems to be that Visual Studio and I are not friends and we have never been. At the same time, why use a heavy tool with expensive licensing if a lightweight, community driven free tool as Tabular Editor can do the job as well. - Why using Tabular Editor if there are tools like BISM Normalizer or ALM Toolkit that can do the job as well?

This is a legit one! During the proof-of-concept we have used both approaches. In our case we got everything working with Tabular Editor, but I cannot say that you have to use one or the other. I believe both approaches are possible. - Why should I migrate to the Cloud?

Scalable, innovation, future-proof and many more reasons. Still not convinced? I think we should have a different talk… 😉

Probably you can come up with many more considerations but let us move on and just give it a try!

The case

Since I do not want to use the customer case to show you the steps to take, I will use my own Azure Analysis Services model to migrate to a Power BI Premium data model. The approach for an on-premises model is identical to the approach with an Azure Analysis Services model.

My Azure Analysis Services Model connects to an Azure SQL Database that is hosted in Azure as well. Our goal is to migrate the model to Power BI Premium, including measures and all other model objects. In order to do this, we use Tabular Editor. If you are not familiar with Tabular Editor yet, please check the below webinar as an intro to the tool.

As a starting point we took the following assumptions:

- You have Tabular Editor installed on your machine

- You have a running model in (Azure) Analysis Services

- You have availability of a Power BI Workspace hosted on Power BI Premium Capacity.

- Your Power BI Premium Capacity has XMLA Read/Write enabled.

If one of the above assumptions is not applicable, please make sure you match these criteria before you move on. Enough side talk, let’s get things done! As said before, you might not have Power BI Premium. Please check the link at the bottom of this blogpost how you can use XMLA endpoints without Power BI Premium.

Let’s migrate!

Below I describe the process of migrating the model from Analysis Services to Power BI step by step. Please know that some of the below steps are unsupported! Use it at your own risk. I advise to try it with a copy of your model first.

1. Open Tabular Editor

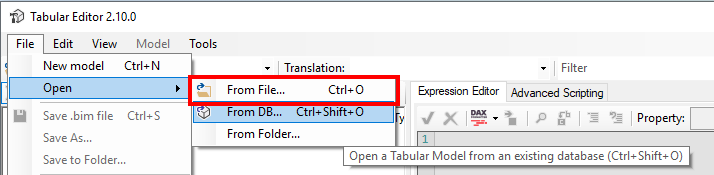

As soon as we have opened the application, we click File > Open > From DB (CTRL + SHIFT + O)

2. Connect to the Tabular Server

In the pop-up window, we connect to our existing (Azure) Analysis Services tabular model and click OK. In my case I use OAuth authentication to read my Analysis Services meta data. I am also listed as Analysis Services Administrator in the Azure Portal.

3. Choose database

After authenticating to the Analysis Services, we must select the Database we want to migrate. In my case I only have one. Please also notice the compatibility level here!

4. Model properties

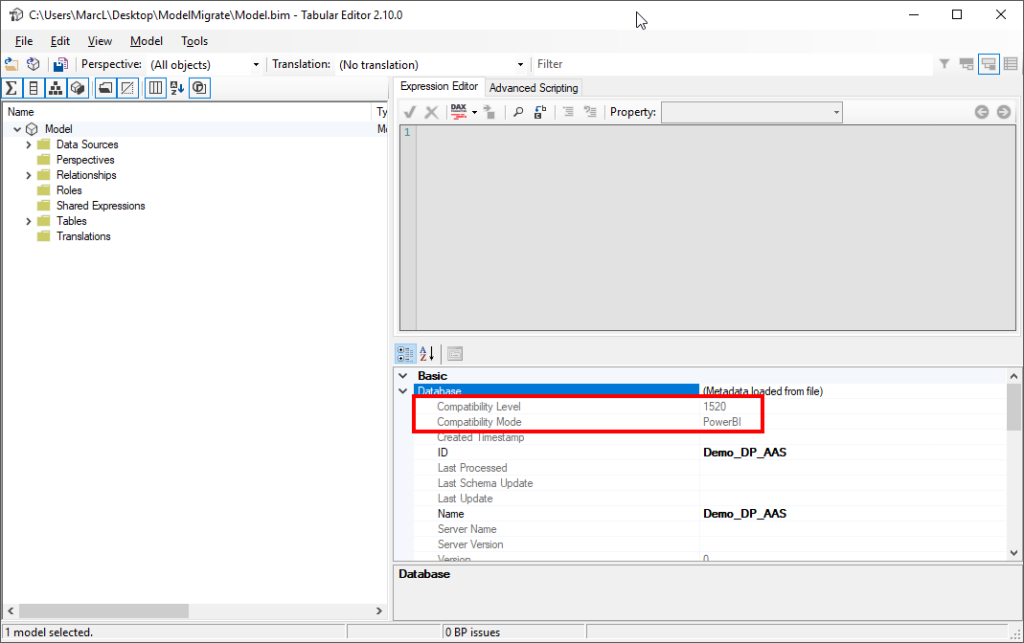

After we successfully connected, we see all our metadata in tabular editor. We do not really have to look at this right now. But notice that the compatibility level is also listed in the database properties.

5. Save Model.bim file

Please click File > Save As and save the Model.bim file on an easy to find location. You do not have to rename the file or anything like that.

6. Update compatibility level

Now, we must do one (unsupported) manual change to our Model.bim file. Please go to the file location and open the Model.bim file with a text editor. I chose to use Notepad++ for this.

In the text editor, we will see all the meta data of our Tabular model nicely formatted in JSON. Please change the compatibility level to 1500 or higher. In my case I use 1520. This is the compatibility level that is used by Power BI Premium. This change is required to move our model to Power BI Premium in a bit. More about compatibility levels in the links at the bottom of this blog.

7. Re-open the Model.bim

After saving the manual changes to the Model.bim file, please go back to Tabular Editor. In the top bar, select File > Open > From File and select the modified Model.bim file.

8. Ready to deploy to Power BI?

Check if your model is ready to be deployed to Power BI. Make sure you are not using unsupported features in your model that do not work (yet?) in Power BI Premium. Also confirm that the compatibility level is updated to 1500 or higher in the database settings (see step 4). Notice that the Compatibility Mode is now also changed to PowerBI.

9. Deploy to Power BI

After checking all the prerequisites, click Model > Deploy (F6) in the top header of Tabular Editor.

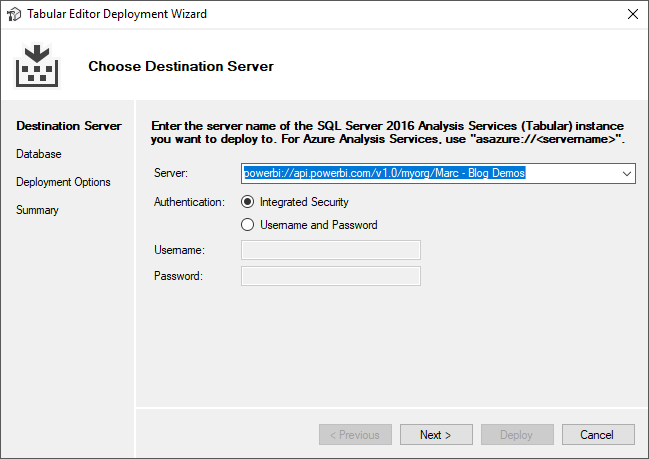

10. Tabular Editor Deployment Wizard

Please enter the XMLA endpoint for the Power BI Premium workspace in the Tabular Editor Deployment Wizard. The XMLA endpoint exists of a general part and the name of your workspace: powerbi://api.powerbi.com/v1.0/myorg/[workspacename]

After you have entered the endpoint, click next and authenticate with your Power BI username and password.

It might happen that you run in to an error because of duplicate workspace names (v1 and v2 workspaces). Please check the links at the bottom of this blog to see how you can get an appropriate XMLA connection string.

11. Choose destination

Now, we have authenticated to Power BI, we have to select the destination where we will deploy our model. You can either select an existing model that are in the workspace or create a new one. In my case I decide to create a new one and enter the Database Name at the bottom of the screen and click Next.

12. Decide what to deploy

Almost done, we only must decide what exactly we want to deploy. In my case I decide to deploy everything except Role Members (currently not supported) and again click Next.

13. Time to deploy!

Everything is configured and ready, time to deploy! In the final screen we get an overview of our configuration before we hit the Deploy button.

14. Deployment progress

After clicking the deployment button, we see a wheel spinning for a second. As soon as it disappears, the deployment is finished.

15. The final step!

There is one thing left we have to do after the deployment. We have only deployed the metadata but no data or connection credentials. We have to configure the credentials in the Power BI Service and hit the refresh button, similar to what we do with any other Power BI dataset.

That is all! Our dataset is now deployed to the Power BI Service and good to go! Now, we can start leveraging the full capability of Power BI Premium!

Wrap up and remarks

Well, in only 15 (baby) steps we were able to deploy our existing (Azure) Analysis Services model to Power BI Premium. As noticed before, Tabular Editor is only one of many tools that can do the job. Personally, I prefer Tabular Editor for many other reasons as well, like the best practices analyzer and because it is all community based!

Though, there are some things we have to take into account. XMLA Read/Write on Power BI Premium is currently (at the moment of writing in June 2020) in public preview. So, there might occur some issues. We are also doing some unsupported steps, especially the manual edit in the Model.bim file is not how you usually should do these kind of edits, it is a tricky step and mistakes are made easy. So please only do this in a copy of your model!

Another thing we should be aware of, is that our result will always be a split between data model and Power BI report, where this is normally packaged together in one single PBIX file. I think this is not a big deal at all and has a lot of advantages. More about that in an earlier blog about a multi-file strategy.

You might think, there is one step missing! What about my Power BI report? The report that previously connected to my (Azure) Analysis Services model, now needs to connect to my Power BI dataset. Since Power BI Desktop does not let you change this connection, how can I get this done? I can totally relate that you want to avoid rebuilding your entire Power BI report or even multiple reports. Luckily, the guys from PowerBI.tips came up with the solution for you. Read their blogpost about how to hot swap Power BI report connections.

Last but not least, you might think this only applies to Power Premium, so this is not for me? You are wrong! Please check out Just Blindbæk his blogpost about using XMLA endpoints without the need for a P-SKU!

Links

- Power BI blogpost, Power BI Premium and Azure Analysis Services

- Webinar Power BI Premium as superset of Azure Analysis Services

- Download Tabular Editor

- Enable XMLA Read/Write or your Power BI Premium Capacity

- Compatibility levels for Azure Analysis Services and Power BI

- Power BI XMLA Endpoint duplicate workspace names

- Move from a single to multi-file strategy

- Hot swap Power BI report connections

- Using the XMLA endpoint without Premium

Pingback: Bring existing tables to Power BI dataflows with Tabular Editor – Data – Marc

Can this be accomplished with PowerBI Pro too?

LikeLike

Hi Filip,

As I describe in the blog post, there is no need for a P-SKU. You can accomplish the same result by leveraging an Azure A-SKU for example. Check the last link at the bottom of the blogpost that will explain more about this.

Hope this answers your question 🙂

Marc

LikeLike

Great write-up… thanks! I tried this and while I like it, I don’t think I can use this approach because I cannot see how it would support Power Bi Incremental Refresh. Please correct me if I’m wrong.

LikeLike

Hi,

Not a 100% sure, but I believe if you remove the incremental settings, you can still move your content to a premium dataset and then reconfigure incremental refresh policies in the Power BI Service.

LikeLike

Hi Marc,

Thanks for this easy step by step blog for migrating Azure AS to PowerBI Premium.

I tried to follow the steps at my end to achieve the same but landed in an error.

All the steps worked fine for me accept the very last step #13.

when I click on the button “Deploy”, it runs for few seconds showing “Deploying” and then throws an the below error.

“Error occurred during deployment

M Engine error: ‘mscorlib; An item with the same key has already been added.’

Technical Details:

RootActivityId:05b06f04-be11-4bda-a181-921c0e84986d

Date (UTC): 7/23/2020 5:23:00 PM”

Can you please help me to understand the error and how to fix the same?

Thanks for you help in advance.

Abid

LikeLike

Hi Marc,

Thanks for posting this easy step by step guide to migrate Azure Analysis Services model to Power BI premium.

I don’t know if my previous query went through successfully or not as I don’t see that one on the blog right now.

I am re-writing my query.

I followed each step exactly the same way as you have explained and all the steps went through well except the last Step #13.

When I click on the button “Deploy”, it starts showing the message “Deploying” and then throws error in a pop-up window as given below.

“Error occurred during deployment

M Engine error: ‘mscorlib; An item with the same key has already been added.’.

Technical Details:

RootActivityId: 48479362-dcb6-454d-b7b6-97a2d0122c85

Date (UTC): 7/23/2020 7:42:00 PM”

Can you please help me to understand the error and how to fix this?

Please let me know if I need to provide any other details.

Thanks,

Abid

LikeLike

Hi Abid,

Too me this error looks like you’re trying to overwrite an existing model in the Power BI Service. Can that be correct? Please check step 11 to see if you create a new model in the service or try to overwrite an existing one.

Marc

LikeLike

Hi Marc,

Thanks for your quick response.

I am not overwriting any existing model in Power BI Service, as I pass a new name in step 11 every time I try to deploy.

Moreover when I try to deploy the same (after doing all the steps) to a Azure Analysis Server, it doesn’t throw any error and get deployed successfully. This confirm there is no issue in the model what I am trying to deploy on PBI server.

I guess, it’s something PBI Server doesn’t accept the model.

Thanks,

Abid

LikeLike

Hmm… That’s interesting. I haven’t had this experience so far and haven’t heard others about it.

Let me try to reproduce on my end.

LikeLike

Thanks Marc,

let me know if I can provide any other details which can help you to reproduce the error at your end.

Thanks,

Abid

LikeLike

I tried, but so far no “luck” every deployment succeeded for me.

LikeLike

Thanks,

not sure what is wrong at my end. The same model is getting deployed to Azure Analysis Server without any issue. So it confirms, there is no any code error or duplicate key existence in the model.

It’s throwing the error only when I am trying to push it to PBI server.

Thanks,

Abid

LikeLike

Hello Mohammad,

I’m facing the exact same error message. Was wondering if you ever managed to the issue in your environment?

Thanks!

LikeLike

Unfortunately not. Are there any specific data sources that are used when you run into these errors?

LikeLike

Hi guys, I was able to get rid of ‘mscorlib’ error by removing all of my Expressions from the solution. After this I got RLS error while deployment and needed to remove Administrator role (it’s not needed in premium anyway).

Basically, my way of solving this was to remove everything from the model step by step and checking if deployment was successful.

LikeLike

I am having the same error di you ever find a solution?

LikeLike

Is it possible to avoid manual actions in Power BI (configure auth)?

LikeLike

Hi Nikolay,

If you refer to authenticate to the sources after migrating, I believe this is possible using the Power BI REST API, not 100% though. You could have a look at the API library for data sources and datasets.

LikeLike

Hi Marc,

I experience the same problem with regards to the M engine error: mscorlib; An item with the same key has already been added.

There’s no current dataset in the workspace.

Only data sources available in the project are shared folders with excel files. Could that potentially be the cause?

Hoping there’s a solution to this, otherwise I’ll have to rebuild the whole project in PBI desktop once again (130+ tables, 1500+measures etc.).

Also, if you would like any more information please let me know!

Thanks in advance,

Ruben

LikeLike

Hi Marc,

Nice article 🙂

But have some issue, i can’t edit the credential, don’t have this line on my dataset settings. and have this message : Refresh can’t be scheduled because the data set doesn’t contain any data model connections, or is a worksheet or linked table. To schedule refresh, the data must be loaded into the data model.

any cloue about that ?

Thanks

LikeLike

Hi Nassim,

To me, this sounds like the queries where transfered, but the connection wasn’t. In the model, this is a separate object. Can you try this and see if this is the case?

Cheers,

Marc

LikeLike

Hi Marc ,

What do you mean by in the model ?

in my BIM file, i can see that i have the needed informtions :

{

“name”: “Adventure Works DB from SQL”,

“connectionString”: “Provider=SQLNCLI11;Data Source=XXXXX-XXXX;Initial Catalog=AdventureWorksDW2014;Integrated Security=SSPI;Persist Security Info=false”,

“impersonationMode”: “impersonateCurrentUser”,

“annotations”: [

{

“name”: “ConnectionEditUISource”,

“value”: “SqlServer”

LikeLike

Hey Marc,

super interesting read. Worked like a charm.

Unfortunately, I think this only works half way though. Without getting the .pbix file there is no way of setting up incremental refresh (https://docs.microsoft.com/en-us/power-bi/admin/service-premium-incremental-refresh)

and then setting up large datasets (https://docs.microsoft.com/en-us/power-bi/admin/service-premium-large-models), right?

You have an idea how that could be done? I’m afraid a proper migration can only be done if we can get our hands on the .pbix file somehow.

Thanks

LikeLike

Hi Lukas,

That might indeed be valid challenges.

My first thought, what about setting up large datasets with a published but empty pbix and then migrating your existing model? Did you try that? I haven’t done it yet, but I would love to hear more if you did!

Cheers,

Marc

LikeLike

Hi Marc,

great idea! I just tired that. Deploying into the empty pbix worked after updating my SSAS model to compatibility version 1520 and setting the defautpowerbidatasourceversion to v3 using TabularEditor.

Unfortunately after deploying I could no longer download the pbix file (it worked before).

Interestingly enough if you use deployment pipelines on that model and deploy it to test, you then can download the pbix file but something seems to be broken because the file fails to open with a “Sequence contains more then one element” message.

A next step could now be to unpack the pbix file and find and fix the issue in one of the files within. I’m pretty sure I won’t be able to do that, but I will start tinkering.

Let my know if you have any other ideas.

LikeLike

Hi Lukas,

We faced the same challenge. The dataset cannot be downloaded as a pbix that can then be further developed in Power BI Desktop. Have you experimented more with this? Did you find anything that can be updated in the unzipped pbix files so that the error disappears?

LikeLike

Hi Luiza,

Thanks for your comment. That’s known and also documented by Microsoft. For any xmla operation done directly on the service, downloading the pbix will not be possible anymore.

I believe this is also listed somewhere on the ideas forum to support download in the future. Please vote for it.

At the same time, it doesn’t hold you back from continuing your development over XMLA using tools like Tabular Editor.

Hope this helps,

Cheers, Marc

LikeLike

You are right Marc, I have now seen the limitation here (https://docs.microsoft.com/en-us/power-bi/create-reports/service-export-to-pbix#considerations-and-troubleshooting)

When it comes to editing a dataset that has been modified with XMLA endpoint and cannot be downloaded, what are the options? Tabular Editor as you detailed in this post (really looking forward to Tabular Editor 3), ALM Toolkit for deployment to the dataset when needing to deploy just some portions of the model? Visual Studio Analysis Services? But the compatibility level 1520 won’t allow the model.bim file to open. Any other tools that can be used at the moment?

LikeLike

Probably there are other tools, but the one you listed is what I mostly use 🙃

LikeLike

Hi Luiza,

I tinkered with that a little more but did not find a way to “fix” it.

Had to drop it for now unfortunately.

LikeLike

Hi,

nice article.

we are multiple people, who are working on the same datamodel.

the idea is to save the model.bim file. then track and sync it by git and load it into the local pbix file to work with the latest model. after finishing, we want to publish it to power bi service and the report creator can live connect and work with the provided model.

is there any way to update the pbix file with an updated .bim file? or is there only the way to have an online dataservice like azure or power bi premium to use the xmla connector?

the problem is i dont have azure or power bi premium. (only pro)

i tried to deploy a model.bim into the already opened power bi session. localhost:port.

but i get an error when i do this.

LikeLike

Hi Eliah,

This doesn’t work with the model.bim. Though you can have multiple copies of the pbix file, and use ALM Toolkit to merge the changes. I have to say, this is a manual process and can’t be automated (yet?) by checking in the file to git or whatsoever.

Hope this helps.

Marc

LikeLike

I did test the ALM Toolkit merge that you suggested Marc, and it does work on measures (yey!), but changes to tables (either power query or descriptions or format) are not allowed – ‘Unable to update table xxx because target is Power BI Desktop or .PBIT, which does not yet support modifications for this object type’.

Like you Eliah, we are trying to find ways for multi-developer on pbix files to become a reality (since we are used to this from .bim files), but haven’t cracked this mistery yet. I am hoping that it will become a reality at some point in the future.

LikeLike

Luiza,

i have found a workaround, which involves a little bit of manual work but for us it seems ok for now.

1. After you have done your work on the pbix file, you open the tabular editor and save the .bim file.

2. then track the .bim file with git so you have always the newest version. and it should also be merged by git if multiple persons made different changes. (if you have conflicts you have to merge this manually)

3. when you start to work again, pull the newest version of the .bim file and open your pbix in desktop (which hasnt the newest model information inside yet).

4. open the ALM Toolkit and select the .bim file as source and your opened pbix as your target. then update everything possible by ALM toolkit and refresh the comparison. after that, everything except queries should be updated (which is better then nothing i think 🙂 ).

5. then open the tabular editor and load the .bim file too. figure out wich differences still exists by using ALM toolkit. and copy paste the query code manually in your queries in your opened pbix. (why tabular editor? and not copy paste i out of the alm toolkit? yea unfortuantely the format inside alm toolkit is in the .bim format and not in power query m format.. in tabular editor you have the format you need)

i think this is just a vew minutes work when you begin your session and its worth it for us.

hope that helpes. (sorry for my rudimentary english)

best regards

Eliah

LikeLike

Thank you Eliah for sharing that! That is quite a good workaround! 😀 We will try your method until something better comes along!

LikeLike

Thanks for your comment Luiza. Indeed it doesn’t work for tables yet. This is a known limitation for any tool doing Tabular Object Model changes inside Power BI. Tables and sources are not (fully) supported yet. Maybe in the future? Who knows… 🙂

LikeLike

Marc – I converted a model to dataset as described but then I can’t edit it in powerbi desktop which was the whole point of using this tool. It appears like the result is the same as just deploying SSAS model from visual studio to powerbi – both result in “Transform data” option greyed out meaning you can’t get into power query. Did I do something wrong or is this just doing the same thing as visual studio?

LikeLike

That’s correct. After deployment over xmla, you cannot download the pbix any more. This is a known limitation.

–Marc

LikeLike

Hi Marc

Is your connection to the data source by default set to import? No option for direct query?

Br Els

LikeLike

Hi Els,

In my example it’s set to import indeed. Personally I prefer Import over Direct Query and that is the best practices according to Microsoft too. So far, I haven’t had a case like this with Direct Query sources to migrate. So honestly I’m not sure how that will work.

Cheers,

Marc

LikeLike

Hi Marc, when using SSAS Import method for Power BI, want to use DAX query to filter by company, date, columns I want ? Do you know how to do it ?

LikeLike

Pingback: Swap connection from Analysis Services to Power BI dataset – Data – Marc