Please also check the follow-up blogpost with all recent additions here.

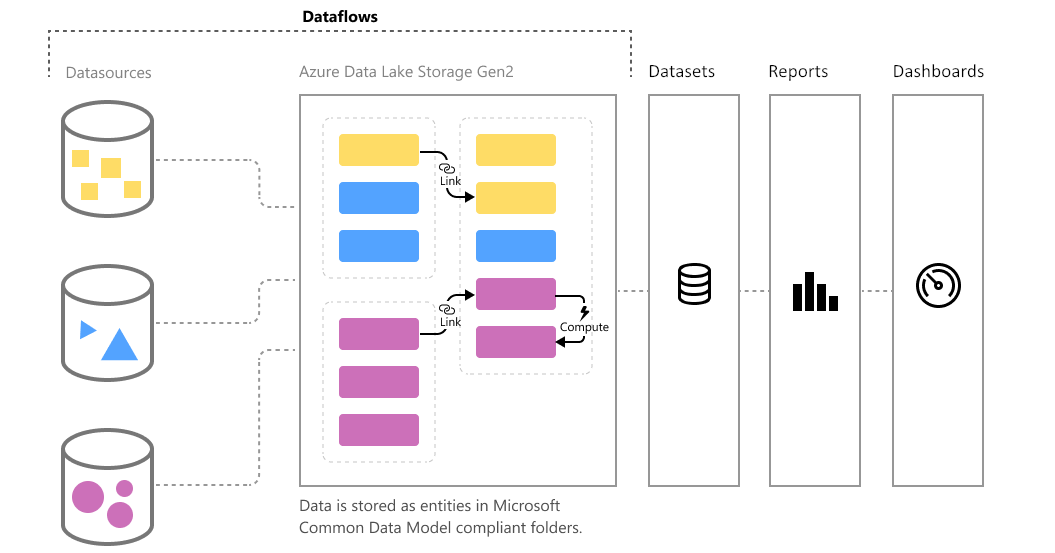

Dataflows are part of the Power BI service for a while now. More and more people are starting to benefit from it in multiple perspectives. The two most heard ones are re-use of data (and logic), but also isolate refreshes to avoid dependencies of refresh failures from different sources.

Same as for your data sources, you might have different workspaces setup in different stages of your DTAP development approach. In the past, I wrote a blog on how to work with a Multi-tier architecture and continuous delivery in Power BI. In this blog, I elaborate on setting up multiple workspaces for each stage of your DTAP approach.

Since we, as Power BI content developers, are rather lazy (or efficient, name it as you like), we don’t want to move all our content from one workspace to another manually. But how to handle this with dataflows since we don’t have a physical file which we can publish from one workspace to another? Let’s automate!

This time I’m not writing the blog alone, but co-authored with my colleague Ton Swart, who is an expert in automating stuff!

Not familiar with Dataflows yet? Take a look at the Microsoft documentation.

Extract the Dataflow definition manually

Dataflows can be easily built in the Power BI Service. Since the dataflow user interface isn’t as intuitive as Power BI desktop, I personally prefer to build the logic (Power Query) in Power BI desktop. After building, easily copy-paste the advanced editor code to a dataflow and you’re ready to go!

However, you want to avoid to copy-paste the full Power Query logic repeatedly to every workspace in your DTAP approach. Luckily there is a native option in Power BI which allows you to extract the dataflow definition to a JSON file. Follow the below steps to extract the dataflow logic

- Go to the workspace where your dataflow is stored

- Navigate through the dataflow section

- Click on the three dots of the dataflow you want to extract the logic from

- Click Export json

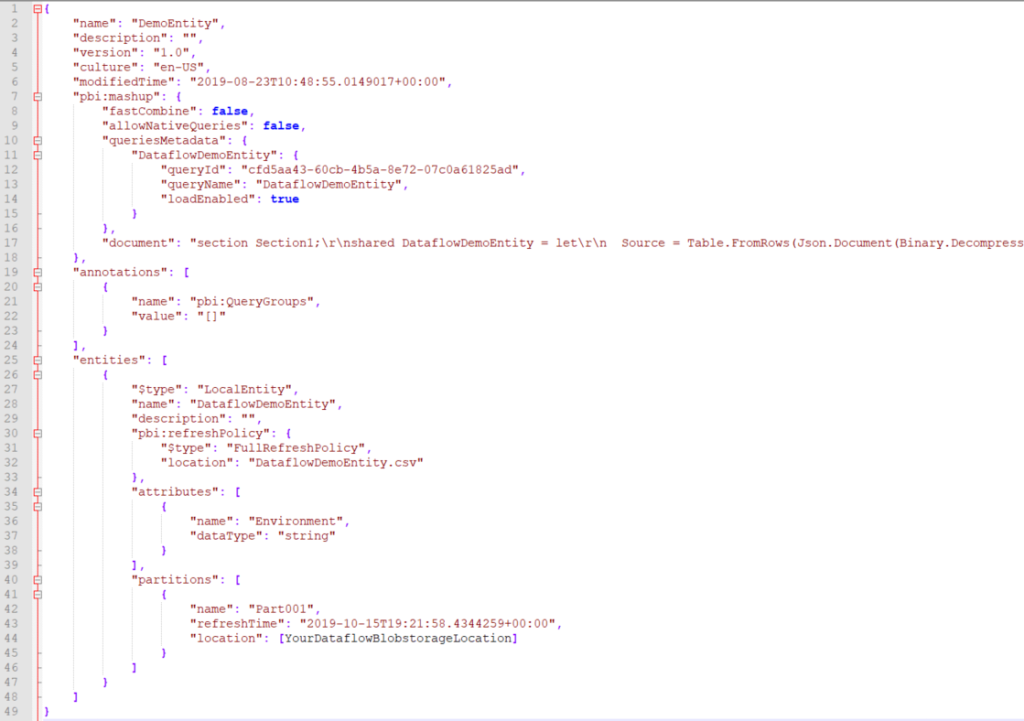

Looking at the extracted JSON, you will recognize that the Power Query code is included in the document section of the dataflow. Besides that, you may notice that there is a list of partitions in the bottom section of the JSON. Every partition represents a successful refresh of your dataflow and a stored dataset on a blob storage. The full location of the blobstorage is mentioned in the JSON as well. This location is not reachable unless your workspace is running on premium capacity and connected your own Data Lake storage gen2 as storage location for your dataflow.

Manually upload the json definition

After exporting the dataflow definition file, we want to move it to another workspace. Luckily, there is an option to import your dataflow definition manually into a new dataflow. As soon as we navigate through another workspace, we can easily apply the following steps:

- Navigate through your destination workspace

- Go to the dataflows section

- Click create, and click Dataflow

- Click the third option, Import Model

- Find the JSON file which you’ve saved when exporting the dataflow definition

- After successfully importing the JSON file, a notification at the right top will appear. Please click Edit Credentials. The credentials to access the underlying data source are not part of the JSON file and need to be entered again.

Automate with the Power BI REST API

Now, here it starts to become interesting. Let’s try to automate the manual steps to an automated process. To automate task in the Power BI service, we have to “talk” to the Power BI REST API. The Power BI REST API provides programmatic access to the report server catalog. For example, basic CRUD (Create, Read, Update and Delete) operations can be done on folders, reports, KPIs, data sources, datasets, refresh plans, subscriptions, etc.

We are using the “MicrosoftPowerBIMgmt” PowerShell module for communicating with the Power BI REST API. Forget the hassle of setting up the App registration within Azure Active Directory for API access, the Power BI PowerShell cmdlets take care of it for you. Just install and start using today!

Extract the Dataflow definition

To extract the Dataflow definition, we use the groups/{groupId}/dataflows/{dataflowId} API call. For this call we first have to figure out the “groupId” and “dataflowId“. So, we start with the groupId by connecting to the Power BI service and get the ID of the workspace “DP_DEMO_dev”:

Now, we have the groupId we can look for the dataflow definition “DemoEntity”. We’re getting all the dataflows from the workspace and filter the dataflow we are looking for. Because the dataflow call isn’t available as a cmdlet in the MicrosoftPowerBIMgmt module, we make use of the Invoke-PowerBIRestMethod that is available, to get all the dataflow definitions:

With these steps, we have the groupId and the dataflowId. With that, we can finally extract the dataflow definition.

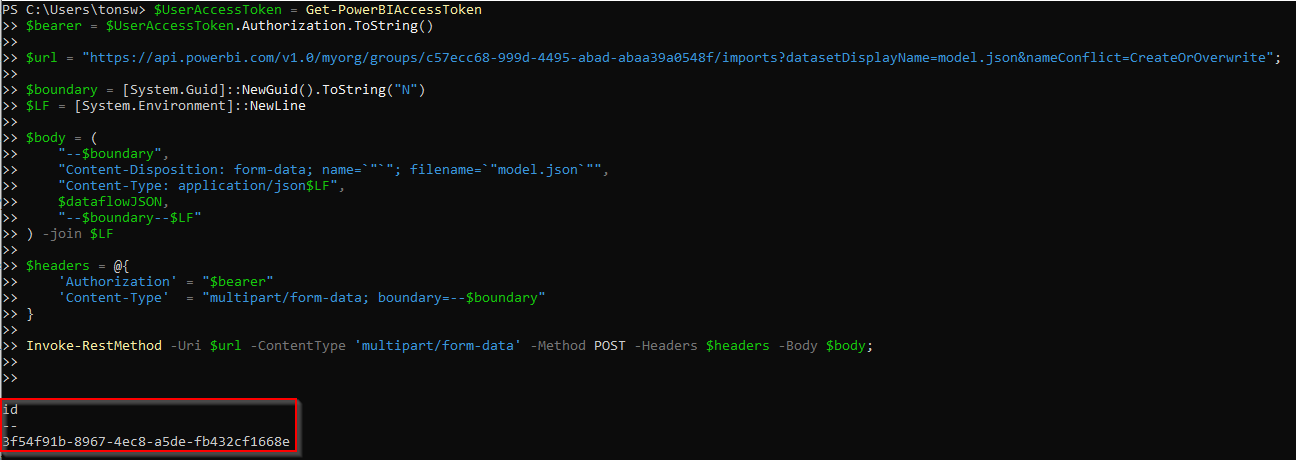

Import the Dataflow definition

Now, we extracted the dataflow JSON definition, we can re-use it to upload it to another workspace “DP_DEMO_acc”. Due to a small bug in the import REST API, we have to remove all the “partitions” properties from the JSON object before importing it to the new workspace. The manual import model in Dataflows does the stripping for you but the API does not. For importing the dataflow definition into the workspace we use the “Post Import In Group” API call. We format the request body with the dataflow definition and import it into the new workspace.

Wrapping things up

The result of the above described PowerShell script includes three parameters which needs to be filled to make this work for you.

- $SourceWorkspaceName = “[YourSourceWorkspaceName]“

- $DestinationWorkspaceName = “[YourDestinationWorkspaceName]“

- $DataflowName = “[YourDataflowName]“

As described above, these names will be used to lookup the corresponding IDs for further calling the APIs.

This approach to move your dataflows, helps you to professionalize your DTAP approach. Of course, you can move your dataflows manually as described above, but what about moving them automatically without doing manual tasks, or even make this part of your Azure DevOps deployment (blog on this topic coming soon!).

Find the end-result PowerShell script in below mentioned GitHub repository. Please, feel free to test, suggest and contribute to this repository as well!

References

- Microsoft Power BI Cmdlets

- Power BI PowerShell MicrosoftPowerBIMgmt

- Power BI REST APIs

- GitHub PowerShell script

Co-author

This blog is co-authored by my colleague, Ton Swart. Ton is a Data & Analytics DevOps Engineer at Macaw and has over 12,5 years of experience in IT. This includes roles as BI Consultant, Data warehouse specialist and IT consultant. His focus is on Business Intelligence. A few of Ton his expertise is CI/CD with Azure DevOps, PowerShell as automating Power BI deployments.

This blog is co-authored by my colleague, Ton Swart. Ton is a Data & Analytics DevOps Engineer at Macaw and has over 12,5 years of experience in IT. This includes roles as BI Consultant, Data warehouse specialist and IT consultant. His focus is on Business Intelligence. A few of Ton his expertise is CI/CD with Azure DevOps, PowerShell as automating Power BI deployments.

Pingback: Moving Dataflows Between Workspaces – Curated SQL

Pingback: Importing model.json to create a new dataflow – BI Polar

Hello,

I am trying to run the script to move the data stream but gives the following error:

Invoke-RestMethod: {“error”: {“code”: “DuplicatePackageNotFoundError”, “pbi.error”: {“code”: “DuplicatePackageNotFoundErr

or “,” parameters “: {},” details “: []}}}

At C: \ Users \ williancavalcante \ Downloads \ Power-BI-Automation-master \ Power-BI-Automation-master \ PowerBI_MoveDataflows.p

s1: 67 char: 17

+ … $ postFlow = Invoke-RestMethod -Uri $ url -ContentType ‘multipart / form- …

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ ~~~~~~~~

+ CategoryInfo: InvalidOperation: (System.Net.HttpWebRequest: HttpWebRequest) [Invoke-RestMethod], WebE

xception

+ FullyQualifiedErrorId: WebCmdletWebResponseException, Microsoft.PowerShell.Commands.InvokeRestMethodCommand

LikeLike

Hello, same error here DuplicatePackageNotFoundError.

LikeLike

Very informative article. Is this functionality available for Pro license users?

LikeLike

I suppose we dont have commandlets to change datasource after importing the json to a different workspace?

We use gateways for 1) dev 2) test and 3)prod and dataflows are built in dev workspace connecting to dev gatewat and dev datasource. When dataflow is being moved to test env we have to manually edit the datasource within dataflow json before importing. I dont see an option of paramerising datasource within dataflow either. Are there any alternatives to this approach?

LikeLike

At this moment there is no option to update the data source or parameter values API based. What I’ve done in the past, is a simple find and replace in the JSON but adding a few steps in the Powershell script.

Export > replace connection string > upload.

Hope this is an alternative for you.

LikeLike

Thanks Marc. Yes are currently doing export replace import json.

LikeLike

Hi Marc – I’m curious as to what you use to check a dataflow refresh has been completed?

one of the options is to export the json and get the refresh time as there is no get api call for a corresponding post api call (https://api.powerbi.com/v1.0/myorg/groups/{workspaceid}/dataflows/{dataflowid}/refreshes) available to get refresh history. Thanks.

LikeLike

Hi Rakesh,

Thanks for reaching out! Unfortunately the API call you’re looking for is not available, as you already said. The option you suggest is actually not that bad, but a pretty complex thing to do. Obviously, exporting the dataflow json definition is a heavier load than only the refresh history. I hope the API call we’re both looking for is there soon, so let’s vote for in on the Power BI Ideas forum:

https://ideas.powerbi.com/forums/265200-power-bi-ideas/suggestions/38235106-dataflow-rest-api-get-refresh-history

Cheers!

Marc

LikeLike

Rakesh, you can get refresh times with the “transactions” URI path. It is a bit inconsistent with datasets, but provides a similar result.

This wasn’t too hard to find. If you are doing software development from .Net, then I highly recommend the nuget: Microsoft.PowerBI.Api. This gives a standard programming interface layer that can Visual Studio can reflect on (giving intellisense and what-not). Compared to using a normal programming interface, I find that using a REST interface can be harder and the features are a lot less discoverable. Using a REST interface (directly) often feels very primitive to me – like programming in an assembly language. I’m not really sure why REST became so popular in the past decade, but I’m very thankful that people are starting to build standard client libraries once again, to place over top of the REST interfaces.

LikeLike

Hi everybody,

I noticed a lot of you received an DuplicatePackageNotFoundError. This is caused by the ImportHandlerMode that was set to CreateOrOverwrite. On first run, this returned the DuplicatePackageNotFoundError when the dataflow did not exist in the workspace yet. I have changed the default ImportHandlerMode to Ignore, which will run successful on first attempt.

Please know that this is only a quick fix. If you try to re-run the same code and overwrite the existing dataflow it does not ignore the existing dataflow, but returns an error that the dataflow already exists. I am in contact with Microsoft to get more clarity about the unexpected behavior for the ImportHandlerMode.

For now, you can continue leveraging the updated PowerShell script! It is available on GitHub again!

Thanks!

LikeLike

Hi Marc,

Thank you for this helpful blog. I frequently receive the error depending on the presence of an existing dataflow of the same name. Any response from Microsoft regarding the inconsistent behavior for the ImportConflictHandlerMode?

For now, it’s a bit cumbersome to switch from Ignore for the initial upload and then CreateOrOverwrite for future uploads. Also of note: the documentation (https://docs.microsoft.com/en-us/rest/api/power-bi/imports/postimportingroup) states that only Abort or GenerateUniqueName are supported with model.json but it appears we can sometimes use Ignore and CreateOrOverwrite depending on what’s already in the target workspace.

Thanks for any guidance!

LikeLike

Hi Nick,

No update with regards to the conflicthandlermode unfortunately. But thanks for bringing this up again. I’ll try it again to see if we can get a bit more clarity on this topic.

Marc

LikeLike

The PG has a bug ID for the problem with the REST API. The title of the bug is “REST API – imports with parameter CreateOrOverwrite throws PackagenotfoundError” and it was created on 5th Sept 2022.

Here is the Bug ID: 875909

LikeLike

Pingback: Why you should care about Power BI Deployment Pipelines – Data – Marc

Hi Marc,

Thanks for sharing! I’ve been able to successfully transfer data flows from 1 workspace to the other based on your suggestions. Data flows are linked to a Sharepoint location, which I’ve updated in the data flow to a new location. However for some reason it keeps both the original and the new source (old Sharepoint and new Sharepoint) in the data source connection/credentials. I’ve reviewed the details several times + I reviewed the .json as well and did not find any link to the old Sharepoint anymore. Any idea what I’m missing?

Kind regards,

Michiel

LikeLike

Hi Michiel,

How did you update the connection? What I did myself in the past, is a find and replace in the json, before uploading to the new workspace. That worked fine for me.

Cheers,

Marc

LikeLike

Hi Marc,

The connection was sitting in a parameter that I updated in the data flow after importing the json into the new workspace. I now tried your suggestion to update in the json file before updating and this did the trick!

Thx a lot for the suggestion!

Cheers,

Michiel

LikeLike

Great to see it worked out. Probably the connection is saved in different places, and therefore it still binds to the old source as well.

Marc

LikeLike

Pingback: Update: Move dataflows across workspaces – Data – Marc

Hi Marc,

thanks for sharing.

Anybody can help me on how to map the dataflow datasources to the on-prem gateway?

I’m not able to find a REST API for doing that and as a result I cannot complete the automation.

I.e. the dataflow is moved to the new workspace but can’t refresh because the mappings between the datasources and the gateway are missing.

Thanks

Stefano

LikeLike

Hi Marc, I am also facing the same issue with the automation that after creating dataflows, it is not mapping to the data gateway automatically. It looks like we need to manually map it and refresh works fine. Can you pls help us on this?

LikeLike

Hi,

I’ve been searching, but apperently this API does not exist. Meaning, you have to assign the dataflow to the gateway connection once, after which you can continue to update the dataflow definition.

–Marc

LikeLike

I’m trying to do this to get a dataflow definition from one workspace and create a copy on another one.

As far as i checked the code is fine, but i get the error:

{

“error”: {

“code”: “ImportUnsupportedOptionError”,

“pbi.error”: {

“code”: “ImportUnsupportedOptionError”,

“parameters”: {},

“details”: [],

“exceptionCulprit”: 1

}

}

}

Is this something related to permissions? I’m using a service principal account for authorization.

Thanks!

LikeLike

Hi Bernardo,

I think the SPN you use for authentication is the problem. The account/SPN used to upload the dataflow will become the owner. Unfortunately SPNs cannot be owner of a dataflow. Can you try with your user account and see if that works?

–Marc

LikeLike

Same error, very strange…

LikeLike

Hi!

You used the powerbi cmdlet until the import api, is there a reason why? Is it possible for you to provide an example of calling the import api with the powerbi cmdlet instead of the invoke-restmethod call?

I tried myself to use Invoke-PowerBIRestMethod but I keep getting errors.

LikeLike

Used the cmdlets as it is easier from the PowerShell module directly until the point where it doesn’t allow us anymore to use that. The import API is a bit more flexible as that allows to import pbix files as well as json definitions of dataflows. Therefore we switch over to the API call.

At the time of writing this blog, the cmdlets didn’t allow us to import the dataflow definition. In all fairness, I didn’t try lately, but you could try to see if that works now?

–Marc

LikeLike

Pingback: Power BI Dataflow Import API – How to deal with sp… – Quant Insights Network