As a Data & Analytics consultant, working on tons of different projects, it is very likely that I’m the creator and owner of a whole lot of dataflow and dataset refreshes. Since these refreshes can only be managed by the owner, this limits the rest of my colleagues from updating the scheduled and maintaining the solution. Working with service accounts can help us here. At least, it is easier to centrally manage the dataflows when you are working from a center of excellence or competence center perspective.

All content which require a refresh, both dataflows and datasets, can only be managed from the workspace perspective. There is no place to have a clear overview of all refreshes scheduled with your own account or a service account. Managing all of them in one central place, will be a very welcome feature!

However, there is already an idea listed on the Power BI forum (please vote for it!), we can easily build a solution ourselves to manage all our refreshes. In this blog I will elaborate on how you can setup your own centrally managed refresh policies by using:

- Power Automate, to trigger the right (API based) actions on a recurrence.

- SharePoint lists, so set and save our refresh schedules.

- Power BI REST API, to trigger the refresh for both dataflows and datasets.

- Power Automate custom connector, to perform an API call easier to the Power BI REST API.

Power Automate and Power BI

Power Automate has two different ways of interacting with Power BI. First, is event triggered actions, where a flow starts running if a defined value is exceeded. This interaction is only the trigger for a flow. Read more about that here. Second, there are also Power BI related actions available in Power Automate. For the managed refresh flow, we need a Power BI action to be triggered based on other settings.

Currently, the native Power BI actions are in preview, but also a bit limited to be honest. The native actions only support the following operations:

- Trigger a Power BI dataset refresh

- Add rows to a Power BI dataset.

Looking at our use case, we want to do a lot more than only triggering a dataset to refresh. For example, dataflows can’t be triggered to refresh based on these actions. To solve this challenge, we created a custom connector, which enables us do everything the Power BI REST API supports.

In Power Automate, you can create HTTP calls for every API call you want to do as well. However, this requires a lot more work (and rework), every time you want to call this API. The custom connector can help to keep our flows simple and clean and re-use API calls in different flows as well. Ida Bergum, wrote a great blog on creating a custom connector for Power BI API calls in Power Automate.

Based on this custom connector setup, we will be able to call a whole lot of different action, which are supported by the Power BI REST API. Besides the dataset, we can call a dataflow refresh as well now.

Centrally manage refresh schedules

Since we decided to have one central place to manage all our Power BI refreshes, we need to store the schedules somewhere. The easiest solution for this, is to simply create a SharePoint list for that.

Looking at the input values for the Power BI REST API, we need at least the groupId (workspaceId) and the dataflow or dataset Id in order to trigger the refresh.

POST https://api.powerbi.com/v1.0/myorg/groups/{groupId}/dataflows/{dataflowId}/refreshes POST https://api.powerbi.com/v1.0/myorg/groups/{groupId}/datasets/{datasetId}/refreshes

Besides that, we must save the refresh schedule as well. Since one of our goals is, to have one central place to save our schedules, we setup a SharePoint list with the following columns:

- Title, free text to give some more context to the item represented.

- WorkspaceId, workspace where the dataflow or dataset is part of.

- ItemType, dropdown to select if the item represented is a dataflow or dataset.

- ItemId, the Id of the dataflow or dataset, based upon ItemType.

- RefreshSchedule, list of hours when the refresh needs to be triggered.

Power Automate setup

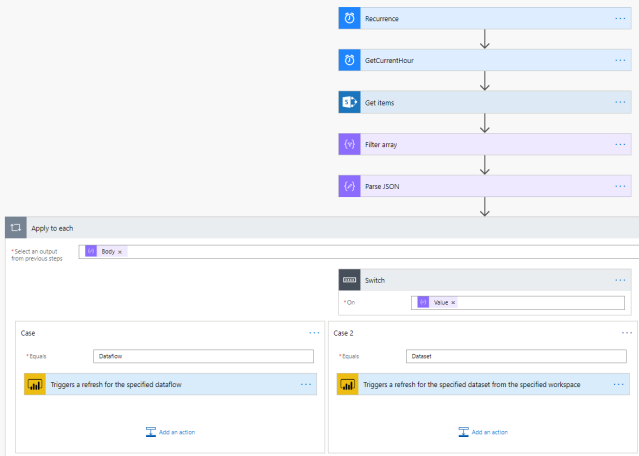

In Power Automate, everything will be brought together. In short, we need to read all items in the SharePoint list, compare the schedules with the current time and if needed, trigger the refresh based on the previously set Power BI REST API custom connector. In this setup, we will schedule the flow to run every hour, so that it will trigger the refresh for a dataflow or dataset every hour if scheduled for that time.

Check scheduled refresh hours

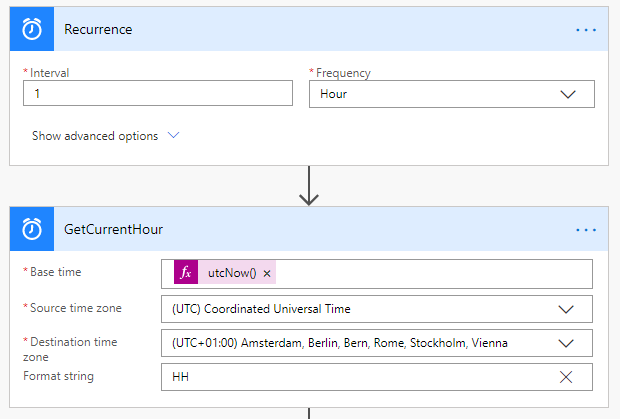

There are multiple ways in Power Automate to retrieve the current hour. In this example, we will use the action to convert a specified time from timezone. This is done on purpose, so that we are 100% sure that we trigger it based on our local time zone.

The below setup will run every hour and return me the first characters of the current time. For example, if it is 16.39 (4.39 PM), it will return 16. In the next steps, we need the output of this step to define which items needs to be refreshed.

Get schedule and split based on dataflow or dataset

Next up, is getting the SharePoint list in the flow. We will do this with the action Get items from a SharePoint list. This will return all items in the previously created list as a json object.

Since the list of managed refresh items can become very long, we filter it down to only the items that needs to be triggered. This will result in a smaller set of items and performs better in the rest of our flow. Because the returned value of the previous step is a json, we will use the operation Filter array for this step.

In this step we used the Contains filter, because possibly there are multiple hours listed in the column RefreshSchedule, representing multiple hours that the specified item needs to be refreshed. The result set of this step is a filtered set of items based on the current hour. The column RefreshSchedule is filtered by the result of our earlier step where we converted the time.

Next, we want to Parse the json object. This will help us to make the json better readable in the next steps and have all objects available in the next steps. Please run the flow once, to generate an example output from the Filter step and insert this as a sample to define the json schema. Please, copy and paste the result of the test run also to a notepad for later use.

The resulting json might look something like the below example.

[ { "@odata.etag": "\"5\"", "ItemInternalId": "73", "ID": 73, "Title": "[ItemTitle]", "OData__x0072_p24": "[WorkspaceId]", "ItemType": { "@odata.type": "#Microsoft.Azure.Connectors.SharePoint.SPListExpandedReference", "Id": 0, "Value": "[ItemType]" }, "ItemType#Id": 0, "twiu": "[ItemIdFromList]", "f6h8": "17", "Modified": "2020-01-12T16:02:20Z", "Created": "2020-01-12T15:27:45Z", "Author": { "@odata.type": "#Microsoft.Azure.Connectors.SharePoint.SPListExpandedUser", "Claims": "i:0#.f|membership|[YourEmail]", "DisplayName": "[YourName]", "Email": "[YourEmail]", "Picture": "[SharePointUrl]", "Department": "[DepartmentName]", "JobTitle": [JobTitle] }, "Author#Claims": "i:0#.f|membership|[YourEmail]", "Editor": { "@odata.type": "#Microsoft.Azure.Connectors.SharePoint.SPListExpandedUser", "Claims": "i:0#.f|membership|[YourEmail]", "DisplayName": "[YourName]", "Email": "[YourEmail]", "Picture": "[SharePointUrl]", "Department": "[DepartmentName]", "JobTitle": null }, "Editor#Claims": "i:0#.f|membership|[YourEmail]", "{Identifier}": "[SharePointListUrl]", "{IsFolder}": false, "{Thumbnail}": { "Large": null, "Medium": null, "Small": null }, "{Link}": "[SharePointUrl]", "{Name}": "[ItemTitle]", "{FilenameWithExtension}": "[ItemTitle]", "{Path}": "Lists/[SharePointListName]/", "{HasAttachments}": false, "{VersionNumber}": "1.0" } ]

Trigger for dataset or dataflow?

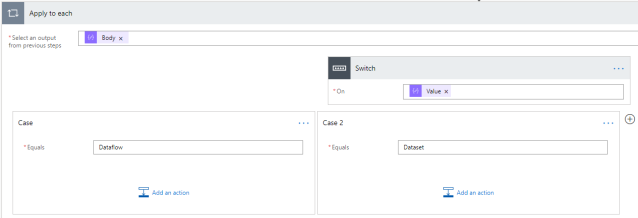

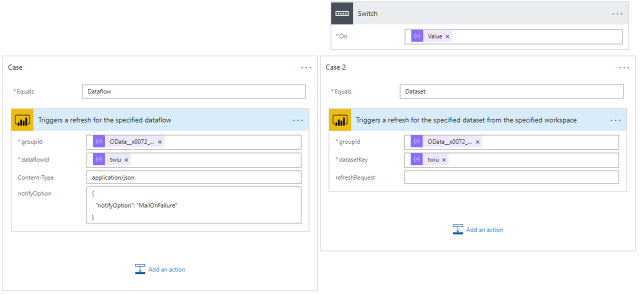

In the flow setup we make use of the Power BI REST API, which has a different API call for dataflows then for datasets. Because of that, we want to perform a different operation in the flow depending on the item. We perform this action with the earlier created custom connector for the Power BI REST API. To perform different operations depending on a dataflow or dataset, we need the switch operation. This operation will be applied based on the ItemType column and represents whether the item is a dataflow or dataset.

As soon as we select the column ItemType as our input value for the switch, Power Automate will automatically add the Apply to each up front. This is needed to run this switch operation for every item in the resulting set. You may notice that the full Body object from our json is performed as the input value for the Apply to each operation.

Now, we can perform different actions based on the Value of the ItemType. Finally, we apply the operation from the before created custom connector to refresh the dataflow and dataset.

Notice that the column names in the json can be a confusing, since these are based on the SharePoint list technical names. Earlier, we copied the result of a test run to a notepad. Have a look at the notepad to get the right column names as input. In my case (and the above sample json), we must use the following columns:

- WorkspaceId = OData__x0072_p24

- ItemId = twiu

The setup of the switch operation will look as shown below.

With this last step, we finished the setup for our flow. It is time to trigger the flow to run. Please know, that the number of refreshes is still limited depending on the license you have (e.g. 8 times a day with Power BI Pro and 48 times a day with Premium).

Further improvements and known limitations

This above setup works perfect to create a better manageable refresh setup. I’m using it at several customers to manage all our refreshes for our Competence Center owned content. Though, there are some considerations, remarks and possibilities to extend this solution which I would like to point out.

- API authentication, the Power BI REST API, configured in the custom connector, is called by a user account. After you added the Power BI custom connector actions in the flow, you needed to authenticate through the connector with OAuth authorization. With this setup, it is required that the account running this operation has the right privileges on workspace level in order to trigger the refreshes.

- Scheduling of the refreshes, the current setup triggers on daily bases and only once an hour. With more complex logic, you can extend this solution with for example exact timings, triggers on specific days of the week or other time related complexity. This is just a beginning of what is possible by combining the full potential of the Power Platform.

- Error handling, in the unwanted situation that one of the triggers fails, for example due to workspace privileges or you have reached the maximum number of refreshes (based on your license), the trigger operation will return an error. It is possible to capture the error message in the flow. With that, you can send a notification or email to the owner of the flow for example.

- Native Power Automate functionality, specific privileges and some additional work are needed in order to setup the custom connector for the Power BI API. It would be much easier when the native Power BI actions in Power Automate will be extended with a native trigger for dataflow refreshes. I have posted the idea on the forum, please vote for it!

- Use a calendar to schedule your refreshes, Chris Webb wrote a great blog post on a similar setup where he used an Outlook calendar to schedule the refreshes. A really nice alternative for the SharePoint list. Find his blog here.

Last but not least, below a full overview of the flow, as configured in the above steps.

Pingback: Monitor your On-premises data gateway with Azure and Power BI – Part 1 – Gateway up-time – Data – Marc

Pingback: How to keep your Power BI dataflows organized and optimized – Data – Marc

Pingback: Refreshing Power BI Datasets & Dataflows with APIs and Power Automate – Olivier Travers

Awesome Marc, Great article… Iam adding your blog to the list of my study references

LikeLike

Lots of broken links in this article…be great to get them updated if there’s replacement sites for them. So what am I missing? You jumped into what power automate does but what is it? Is that a tool you download from github or? Thanks

LikeLike

Thanks for flagging Gary. Will update it soon🙃

LikeLike

hi, is there a way to know whether a dataset refresh was successful, as there is for dataflows? if the dataset fails to refresh in a flow, an email is not sent by the Service. thanks!

LikeLike

Hi,

Not sure if I fully understand your use case and why you shouldn’t receive a mail on failure. You can configure in scheduled refresh who should receive a mail upon failure.

Nevertheless, if you’re familiar with APIs, have a look at this one:

https://docs.microsoft.com/en-us/rest/api/power-bi/datasets/get-refresh-history-in-group

–Marc

LikeLike

Hi Marc, there is a trigger available in Power Automate (PA) called “When a dataflow refresh completes” and it returns Success or Fail. It can be used, for example, to initiate a dataset refresh if the dataflow refresh completes successfully. My question is whether you know of a similar trigger for datasets (or perhaps a different technique for achieving the same).

One good reason to have it is that dataset refreshes that you start in PA and fail do not generate failure emails. This is different from what happens in the Service, where you get failure emails when a dataset refresh fails.

Thanks,

Adolfo

LikeLike

I suggest to use this API: https://docs.microsoft.com/en-us/rest/api/power-bi/datasets/get-refresh-history-in-group?WT.mc_id=DP-MVP-5003435&

With that, you can see the refresh history, so you can check whether it was refreshed in the past hour for example. If you build a loop that keeps checking the status of the refresh in the history, you should be good to go.

–Marc

LikeLike