The first post after my vacation. I was in doubt for a while whether I should write this one or not… given the preview state. Since one of the customers I work for asked me for advise today about Power BI Datamarts, I thought lets do it! They convinced me that it would be a valuable blog for many. I hope for you too.

What is this blog about? I will explain my first experiences with Power BI Datamarts which is currently in preview state. I will elaborate on my test cases, the value I see in Datamarts but also some open ends and where you can potentially position it in your Power BI solution architecture.

Introduction to Power BI Datamarts

When I started my own experiences with Datamarts, the first thing I did was googling for content of others, like many other people most likely will do. Two well-known sources bumped up directly, from famous people in the community. First of all, Guy in a Cube, where Adam and Patrick showed what Datamarts are in one of their videos.

“A Datamart is the access layer of the data warehouse environment that is used to easily analyze and distribute data to the users.” – Guy in a Cube

Secondly, Matthew Roche, Program Manager on the Power BI team who blogs on SSBIpolar.com also shared his perspective on Datamarts. Here I also want to quote Matthew, as I tend to agree with his statement.

“Power BI Datamarts are like dataflows with benefits” – Matthew Roche

Then obviously I looked at the official documentation about Datamarts, which clearly tells us what are Datamarts but also what is it not. To finally quote a part from the documentation:

Datamarts help bridge the gap between business users and IT. Datamarts are self-service analytics solutions, enabling users to store and explore data that is loaded in a fully managed database. Datamarts provide a simple and optionally no-code experience to ingest data from different data sources, extract transform and load (ETL) the data using Power Query, then load it into an Azure SQL database that’s fully managed and requires no tuning or optimization.

What are Datamarts?

Datamarts can be seen in various solution areas, being:

- Self-service relation database capabilities without IT involvement, as you can easily spin up Datamarts in the Power BI Service, without the need for an Azure Subscription or anything.

- No-code experience for data ingestion, preparation and transformation, where Datamart creators can benefit from the knowledge they already have about Power Query in building dataflows and datasets with Power BI.

- Building semantic models in a holistic cloud experience, where basic modeling capabilities like building relationships, basic DAX measures and row-level-security can be done in the web editor and there is no further need for Power BI Desktop or other client applications.

What are Datamarts NOT?

Now we have a better understanding what Datamarts are, it is also important to understand what it is not and where it will not help you.

- Datamarts are not a full-blown replacement for Power BI datasets like we know them nowadays with enhanced features like aggregations, more complex DAX and object-level-security.

- Datamarts are not a replacement for dataflows, as they serve different needs. Dataflows will still be used to load centralized dimensions for reuse in multiple data models across your Power BI ecosystem and easily share them with others.

- Datamarts editor will not be a replacement for Power BI Desktop, simply as not all features are covered like building user defined aggregations, more complex DAX expressions or security setups to just mention a few things.

- Datamarts will not replace your existing data platform or enterprise data warehouse. Simply as it is intended for self-service needs and is less scalable for extremely large volumes. Also Datamarts are delivered in a SaaS offering, where a data platform typically comes as PaaS offering where architects and engineers define pipelines for data ingestion and configure for specific security requirements, like VNETs, which is not possible with Datamarts today.

Where do Datamarts fit in your architecture?

So far, many things that we already learned about Datamarts. Though, if you are designing Power BI solutions, you might question yourself now, where do Datamarts fit in my solution architecture? I think there are a few use cases that can help you to understand where Datamarts can come in useful.

Larger scale than datasets only

In case you are building a solution, where you need larger scale and you can achieve with using Power BI datasets alone, you can consider to first build one or multiple Datamarts to create small reusable artifacts that can be used across many solutions. This has many similarities of what we used to do with dataflows, but now with all the additional features of Datamarts such as Row Level Security. With a Datamart, you will get an auto-created dataset. This dataset directly connects to your Datamart which allows you to build new reports on top directly.

Furthermore, you can allow others to use your Datamart as a starting point, but enrich it with their own data in Power BI Desktop for example. Power BI Desktop nowadays has a default connector to Power BI Datamarts. If you use this connector however, you are connecting to the auto-generated dataset without noticing. So in fact you are not connecting to the Datamart, but the dataset instead. You can see this by looking at the lineage view in the Power BI Service. The cool thing is, that as an author of the Datamart, you build the entire solution in one interface and did not use Power BI Desktop. So all your users whom reuse your Datamart will benefit from pre-created measures and relationships that you defined, as you know the source of data the best!

Ad-hoc analysis

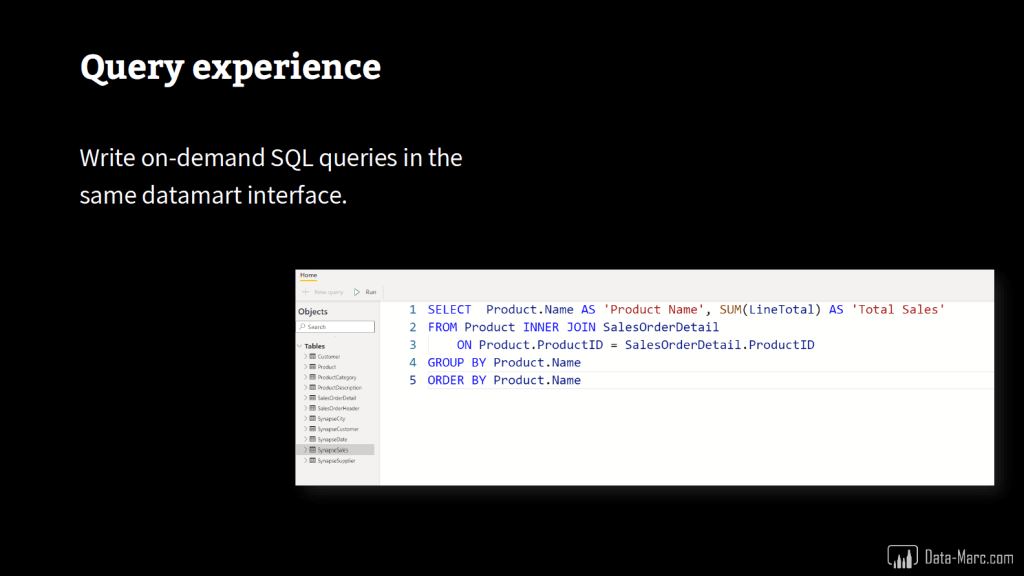

Also, ad-hoc query experiences can be achieved by either using the visual query editor like we know from Power Query in dataflows.

But alternatively, you can also directly write your SQL expression in the Datamart experience. Which opens up Power BI for many new users who are comfortable with SQL, but not so much with Power Query or DAX. I think this is a huge benefit!

Sharing secured dimensions

Not too long ago, I wrote a blogpost about sharing dataflows from a single workspace. In that blog I explained how you can build a dataset with object-level-security to only share those few dimensions relevant to others, without giving them workspace permissions. Datamarts might also be an alternative solution in this scenario. Although do not support object-level-security today, you can set up a Datamart where you ingest data from various sources, define row-level-security on top and then share the Datamart with others.

Connect with a SQL endpoint for reuse outside of Power BI

If you have users in your organization who are less mature with Power BI and want to use your Datamart elsewhere, or your central data platform team wants to leverage the output of the logic you defined, Datamarts might also be an option. Datamarts come with a SQL endpoint, which allow others to connect to this endpoint from other tools if they want. This is a huge potential for reuse in for example the data platform. However, during preview the supported SQL clients are limited to SSMS and Azure Data Studio.

Magic uncovered?

When I was trying Datamarts for the first time, there were no APIs or anything like that to interact with Datamarts. People who know me longer than today, know that I will not stop by just reading documentation. I went for it and executed some random API calls to see what is inside my Power BI workspace after I created a Datamart.

By using a simple PowerShell script, I executed the query to list all dataflows in my workspace and to my surprise, there were actually dataflows that I did not create. Also, these dataflows are not visible in the Power BI Service interface.

Once I found this, I wanted to have a closer look at these dataflows in specific. I found that this hidden dataflow includes exactly the tables that I used in my Datamart, which made me conclude that it must be the same.

In case you want to try-out this little trick yourself, below script is the one I used. In order to execute this, you need to install the Cmdlets for Power BI first.

# Define workspace Id

$WorkspaceId = "{Specify your workspace Id here}"

# Sign in to Power BI Service

Connect-PowerBIServiceAccount

# Get all dataflows in workspace

$ListDataflows = Invoke-PowerBIRestMethod -Method GET -Url https://api.powerbi.com/v1.0/myorg/groups/$WorkspaceId/dataflows | ConvertFrom-Json

$ListDataflows.value

# Get dataflow source

$DataFlowObjectId = $ListDataflows.value.objectId

Invoke-PowerBIRestMethod -Method GET -Url https://api.powerbi.com/v1.0/myorg/groups/$WorkspaceId/dataflows/$DataFlowObjectId/datasources

Shortcomings

As Datamarts are still in public preview during writing this blog post (August 2022), there are some limitations that come with Datamarts. Obviously, Microsoft is curious to hear our feedback about what we think is missing in Datamarts and where they can improve. In this section I will highlight a few of the current shortcomings in my opinion.

Total data volume

According to documentation, Datamarts should support up to 100GB of data at this point in time. I would expect this limitation to be lifted before Datamarts will become general available. However, in my test runs, I managed to load 163GB of data. Below image shows the total data volume processed in Azure Synapse Analytics (top screenshot) and the successful refresh of the Power BI Datamart (bottom screenshot).

Datamarts and deployment pipelines

Deployment pipelines in the Power BI service already support Datamarts, which is great news! The Datamart shows up in the interface and can be deployed using the pipeline. However, the auto-generated dataset does not show up in the deployment pipeline, which is a bit unexpected as it does exist in the workspace. Despite it now showing in the pipeline, it is deployed or maybe even re-generated after deployment.

DAX measures in Datamarts

It is even possible to create DAX measures in Datamarts, which then appear in the auto-generated dataset. Big kudos for the team for bringing the DAX capabilities to the Power BI Service! I think this is a huge step forward. Even better, you can even write DAX measures from the model view, which is impossible in Power BI Desktop today.

If you are writing a DAX expression in a Datamart, there is no way to validate the output of this measure. Potential work-arounds could be:

- Having a Power BI Desktop instance on the side, where you refresh the model after creation of a Measure and put it on the screen in your report to validate. This might result in pinging back-and-forward a lot.

- Having a report open in the Power BI Service, connected to the auto-generated dataset to test the new measure. Keep in mind that you mind need to refresh the cache before the measure appears in the Fields pane on the left side.

Unknown capacity utilization during preview

During the preview period, Power BI Datamarts are offered for free. You do require to have Power BI Premium (P-SKU, A-SKU or PPU) to get started with Datamarts, however the compute of your Datamarts will not be charged as capacity utilization.

Of course, this is a great offer! But as I’m also a capacity administrator and trusted advisor for my clients, I have my doubts about this at the same time. Although the utilization is not charged on the capacity, it would be very welcome to see the impact on the capacity if it was charged. Cause now, capacity administrators are in the dark and have no clue about the future impact of Datamarts to their capacity. As potential risk, users in your organization start creating new Datamarts now and build business-critical solutions based on this new technology. But at the time Datamarts become general available, it will be charged as part of your premium capacity. As there might be business-critical solutions at that time, there is no way back! Only way forward is upgrading your capacity when the contribution makes you reach the capacity limits.

My expectation of the capacity utilization by Datamarts is significant. Typically, the main utilization on a capacity is by report interactions (queries) and by performing refreshes. Both happen with Datamarts, as users will build reports on top of your Datamart, maybe even build their own data models on top and you will schedule refreshes to keep the data up to date. Though, this is just an assumption and not based on any information available at the moment of writing this blog.

Govern Datamart creation

This brings me to the last point. At initial release of Datamarts in preview, Power BI administrators could only open up Datamarts to the entire organization. There was no way to limit this by a security group. Luckily Microsoft picked up the feedback provided by many! Because with a recent enhancement, it is now possible for Power BI administrators to define a security group to limit who is allowed to create Datamarts in an organization.

Wrap up and conclusion

Coming to a wrap up, I think Datamarts can be considered as dataflows on steroids, or as Matthew Roche said, “Dataflows with Benefits” if you will. There are definitely similarities between both and we have learned that the back-end of Datamarts is actually dataflows. Especially functionality like Row Level Security and the ability to pre-define measures in a Datamart will open up many new possibilities!

Next to the additional functionalities, I think there is a huge new group of people that might start working with Power BI, since they can now write SQL queries against a Power BI artifact. There is no need to learn DAX or Power Query (M) for those who are already familiar with SQL and want to do some ad-hoc analysis.

The SQL endpoint of Datamarts also brings up new future potentials, where data from Datamarts can be loaded into data platforms directly. In that potential scenario, Power BI might be considered more like a ETL tool where business users can add their business logic easily in an interface before it is inserted in the central data platform.

Last but not least, a word to the Power BI tenant administrators. There were some reasons to not open up Datamarts for your organization. As I explained above, there is unclarity about what the impact on your Premium capacities will be in the future. However, with the recent enhancement in tenant settings, you have everything at your hand to control and govern the creation of Datamarts. Make sure you create that security group and allow your power users play around with these new functionalities and provide you (and Microsoft) feedback about the value of Datamarts for the organization.

Similar to what I described once in a blog about a phased roll-out of Power BI features. Make sure power users are aware of the risks of working with previews, like preview features are still in development, adapted to change or might behave inconsistently.

I’m curious to see where Microsoft is heading towards with Datamarts! Let’s hope the future is bright! 🙂

Reblogged this on ERP and BI.

LikeLike

I am looking for documentation on Datamarts being loaded into an Azure SQL Database. I need information on the how, where and security. Is it a shared resource pool where ‘outsiders’ can access data?

Do you know of any resources that can help answer these questions?

LikeLike

Hi Cody,

Found this in the documentation:

Datamarts are a fully managed database that enables you to store and explore your data in a relational and fully managed Azure SQL DB. Datamarts provide SQL support, a no-code visual query designer, Row Level Security (RLS), and auto-generation of a dataset for each datamart. You can perform ad-hoc analysis and create reports, all on the web.

https://docs.microsoft.com/en-us/power-bi/transform-model/datamarts/datamarts-overview?WT.mc_id=DP-MVP-5003435#comparing-dataflows-to-datamarts

Your question with regards to shared resource pool etc, is not in this documentation and I’ve not read it. But to do some assumptions, I would guess it is secured in a similar way as Power BI in total is today, where your dataflows are saved on a fully managed data lake for example. Power BI (Pro) as SaaS offering is a managed solution by MSFT, where all these kind of things are handled for you. I expect the same for Datamarts at this point in time, also given the 100GB limit during preview.

Once it reached GA, it might be different and the Azure SQL DB might be on the same cluster as where your Premium capacity runs, since you need Premium anyhow for Datamarts.

Once again, all assumptions and just guessing. If you really need an answer, I suggest you reach out to MSFT. 🙂

–Marc

LikeLike

Thank you for the information. I share the same assumptions but really need to get confirmation.

Thank you.

LikeLike

Cody, it does create an Azure SQL Database, but it’s not one you can see through your Azure subscription. It falls into a “special” category of SQL Databases that are associated with your tenancy, but not “manageable” like other databases. At least not yet. (The same thing exists for Dynamics 365 Finance & Operations).

Now.. it can be accessed by external users if you as the admin give them rights. Part of creating the Datamart is the creation of the traditional connection string. You can use SSMS or write your own application to query the database.

Using SSMS (as the admin) you can also grant user access. Last I checked it support SQL accounts and probably supports AAD now as well, but I’m not sure on that last part.

Remember, a PBI Datamart is “read-only” for users accessing it. It can only be populated and refreshed by the Power BI service, based on how it was originally created.

LikeLike

Great article!

Datamarts look really promising but in testing the main limitation for us seems to be the speed (or lack of it), there was a recent MS Blog post stating this had been improved but it’s still super slow for the end user.

We tested by duplicating a simple star schema as a traditional dataset and also as a Datamart. The fact table has about 300k rows.

With a single simple matrix visual the traditional dataset version has an instant response, the datamart version lags for 5-10 seconds just drilling down a single row (for the datamart report we have tried connecting via the datamart option, directly to the generated dataset and also the SQL endpoint without any noticeable difference)

If they get the performance issue sorted (or we figure out what we are doing wrong) it would be great to be able to set up template datamarts. Power users could bring in central data assets into a basic (quality assured) datamart which is placed in a library, users could then save a copy and just need to bolt on their extra data items etc.

LikeLike

Phil and Marc,

That’s something I think will be sorted out in the future.

Along with pricing.

My thought is, if Datamarts is actually a SQL Database deployment, then that means customers will eventually be charged based on a SQL Database tier.

AND… it makes sense that we should be able to choose the right tier/size that meets our needs. SQL Database allows me to choose general purpose/compute optimized/and memory optimized VMs and sizes from 4vCores up to silly amounts.

I’ve talked with several MSFTers but they’re not tipping their hat at this point. I think it’s all based on feedback from customers.

LikeLike

I don’t think it will be up to the user to choose a tier. It is a SaaS offering and fully managed. If you need more capacity, my assumption is that the only way will be upgrading the capacity (or auto scale).

But all assumptions… We just have to be patient and time will tell.

–Marc

LikeLike

Pingback: Thoughts on Power BI Datamarts – Curated SQL

Also… Marc….

Great article!

Really well done.

LikeLike

Pingback: What, How, When and Why on Power BI Datamarts [Hill Sprint] – Data Ascend

Pingback: When should you use Power BI Dataflows vs Power BI Datamarts? – Data Ascend

Pingback: Can you refresh a Power BI Datamart Programmatically? – Data – Marc